When they go deep, we go wide: Why almost everyone is getting marketing science wrong

I'm going to start the new year off on a controversial note – not with a prediction (predictions are overrated) but with an observation. I think most of the chatter and hype about data science in marketing is looking in the wrong direction.

This is a bit of a long read, so bail out now or brace yourself.

I've worked in marketing analytics, marketing technology, digital marketing and media for the last decade. I've built DMPs, analytics stacks, BI tools, planning automation systems and predictive modelling tools, and more than my fair share of planning processes. I am, it's fair to say, a marketing data nerd, and have been since back when jumping from strategy to analytics was considered a deeply weird career move.

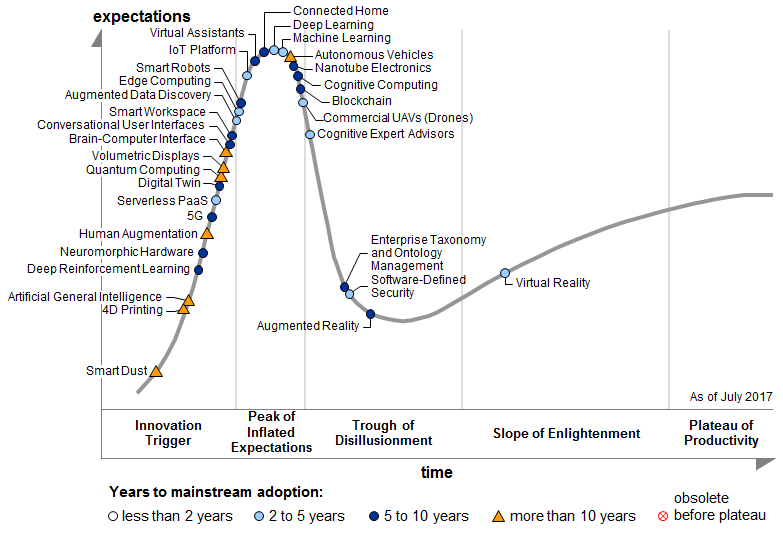

My discipline has become, slowly-then-quickly, the focus of everyone's attention. The industry buzzwords for the last few years have been big data, analytics, machine learning and AI. We're starting to get to grips with the social and political implications of widespread data collection by large companies. All of this makes data-driven marketing better and more accountable (which it badly needs to be). But all of this attention – the press coverage, the frenzied hiring, the sales pitching from big tech companies, all of it – has a bias built into it, one that means talented data scientists are working on the wrong problems.

The bias is the false assumption that you can do the most valuable data science work in the channels that have the most data. That sounds self-evident, right? But it is, simply, not true. We believe it's true because we confuse the size and granularity of data sets with the value we can derive from analysing them.

Happiness is not just a bigger data set

We're used to the idea that more data equals better data science, and therefore that by focusing on the most data-rich marketing channels, you will get the best results. We are told this every day and it is a simple, attractive story. But the biggest gains in marketing science come from knowing where to be, when to appear and what to say, not how to optimise individual metrics in individual channels. Knowing this can drive not just marginal gains but millions of pounds of additional profit for businesses.

This makes lots of people deeply uncomfortable, because it attacks one of the fundamental false narratives in marketing science: that the road to better marketing science is through richer single-source data. This narrative is beloved of tech companies, but it comes from an engineering culture, not a data science culture. Engineers, rightly, love data integrity. Data scientists are able to find value from data in the absence of integrity, by bringing a knowledge of probability and statistics that lets us make informed connections and predictions between data sets.

Marketing data is the big new thing, but from the chatter, you would believe that the front line of marketing analytics sits within the walled gardens of big data creators like Google, Facebook, Amazon or Uber. These businesses have colossal amounts of user data, detailing users' every interaction with their services in minute detail. There are, to be sure, massive amounts of interesting and useful work to be done on these data sets. These granular, varied and high-quality data resources are a wonderful training ground for imaginative and motivated data scientists, and some of the more interesting problems relate to marketing and advertising. For example, data scientists within the walled gardens can work on marketing problems like:

- How do I make better recommendations based on people's previous product/service usage?

- How do I find meaningful user segments based on behavioural patterns?

- How do I build good tests for user experience features, offers, pricing, promotions, etc?

- How do I allocate resources, inventory, etc., to satisfy as many users as possible?

All of which is analytically interesting and important, not to mention a big data engineering challenge. But if you're a data scientist and particularly interested in marketing, are these the most interesting problems?

I don't think they are.

These are big data problems, but they are still small domain problems. Think about how much time on average people spend in a single data ecosystem (say, Facebook or Amazon), and the diversity of the behaviours they exhibit there. You are analysing a tiny fraction of someone's behaviour; worse, you are trying to build predictive models from the slice of life that you can observe in minute detail. If you work in operations or infrastructure, almost all the data you need sits within the platform. But if you are doing marketing analytics, swimming in the deep but small pool of a single data lake can cause a serious blind spot. How much of someone's decision to buy something rests on the exposure to those marketing experiences that you happen to have tracked through that data set?

As a marketing scientist you have an almost unique opportunity among commercial data scientists: to build the most complete models of people's decision-making in the marketplace. Think about the last thing you bought: now tell me why you bought it. The answer is likely to be a broad combination of factors… and you're still likely to miss out some of the more important ones. As marketing scientists we're asked to answer that question, on a huge scale, every day in ways that influence billions of dollars of marketing investment.

We need bigger models, not just bigger data

Marketing analytics is a data science challenge unlike most others, because it forces you to work across data sets, often of very different types. The machine learning models we build have to be granular enough to allow tactical optimisation over minutes and hours, and robust enough to sustain long-range forecasts over months and years.

The kinds of questions we get to answer include:

- What is the unique contribution of every marketing touchpoint to sales/user growth/etc?

- Can we predict segment membership or stage in the customer journey based on touchpoint usage? How do we predict the next best action across the whole marketing mix?

- How do touchpoints interact with each other or compete?

- Are there critical upper and lower thresholds for different types of marketing investment?

- How sensitive are buyers to changes in price? What other non-price features would get me the same result as a discount if I changed them?

- How important is predisposition towards certain brands or suppliers? What is the cost and impact of changing this vs making tactical optimisations while people are in the market?

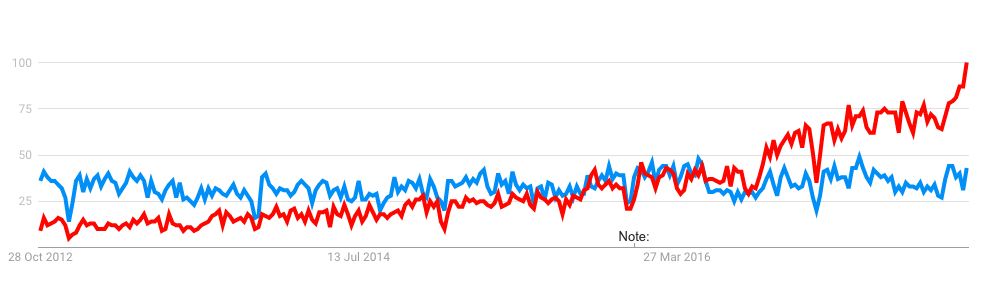

Yet we have a massive, pervasive blind spot. We are almost all acting as if marketing science applied only to digital channels. Do a quick Google for terms like 'automated media planning' or 'marketing optimization'. Almost all of the papers and articles you will find are limited to digital channels and programmatic/biddable media. I have had serious, senior people look me in the eye and tell me there is no way to measure the impact of brand predisposition on market share, no way even to account for non-direct-response marketing touchpoints like television, outdoor advertising or event marketing. This is, of course, wrong.

Everywhere you look, there is an unspoken assumption that the whole marketing mix is just too complicated to be analysed and optimised as a whole – that the messy, complex landscape of things people see, from telly ads to websites to shelf wobblers, needs to be simplified and digitised before we can make sense of it. It's little surprise that this idea, that anything not digital is not accountable, is projected loudest by businesses who make their money from digital marketing.

Again, this is an engineering myth, not a data science reality. Engineers, rightly, look at disunited data sets and see an integrity problem that can be fixed. Data scientists should (and I hope do) look at the same data sets and see a probability problem worth solving. The truth is that it is possible to use analytics and machine learning to build models that incorporate every marketing touchpoint, and show their impact on business results. The whole of media and marketing planning is a science and can be done analytically – not just the digital bits. Those who claim otherwise are trying to stop you from buying a car because all they know how to sell you is a bicycle.

This is the part that makes people uncomfortable – because it requires a more sophisticated data science approach. Being smart within a single data set is relatively easy – getting access to the data is a major engineering problem, but the data science problems are only moderately hard. As a data scientist within a single walled garden, it's easy to feel a sense of completeness and advantage, because only you have access to that data. Working across data sets, building models for human behaviour within the whole marketplace, needs a completely different mindset. There is no perfect data set that covers everything from the conversations people have with their friends to the TV programmes they watch to the things they search for online to the products they see in the shops – yet we need to build models that account for all of this.

Probability beats certainty… but it's harder

Making the leap from in-channel optimisation to cross-channel data science means having a better understanding of the fundamentals of probability theory and the underlying patterns in data. For example, I've built models that predict the likelihood that people searching for a brand online have previously heard adverts for the brand on the radio, and the optimum number of times they should have heard it to drive an efficient uplift in conversion to sales. If I had a data set that somehow magically captured individuals' radio consumption, search behaviours and supermarket shopping, this would be a large data engineering problem (because there'd be loads of data) but a trivial data science problem (because I'd just be building an index of purchasing rate for exposed listeners vs a matched control set of unexposed, etc.). This is the kind of analysis that research and scanning panel providers have been doing for decades - it's only the size of the data set that's radically new.
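To show just how trivial that last calculation would be, here is a minimal sketch of the exposed-versus-control index, assuming the magical single-source data set existed. The `Group` class, the function names and every number are made up for illustration; they are not taken from any real panel or from the models mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Group:
    """A matched group of people (hypothetical structure for illustration)."""
    people: int   # number of individuals in the group
    buyers: int   # how many of them went on to buy the brand


def purchase_rate(group: Group) -> float:
    """Share of the group that bought."""
    return group.buyers / group.people


def uplift_index(exposed: Group, control: Group) -> float:
    """Purchase rate of exposed listeners indexed against the control.

    An index of 100 means no difference; 120 means exposed listeners
    bought at a rate 20% higher than the matched unexposed group.
    """
    return 100.0 * purchase_rate(exposed) / purchase_rate(control)


# Invented figures: two matched groups of 20,000 people each.
exposed = Group(people=20_000, buyers=1_300)
control = Group(people=20_000, buyers=1_000)

index = uplift_index(exposed, control)
print(f"Uplift index: {index:.0f}")
```

All of the difficulty hides in the word "matched": the arithmetic is a one-liner, but only if someone has already handed you clean exposed and control groups observed in the same data set.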

But of course, that data set doesn't exist. It's unlikely it'll ever exist, because the cost of building it would be far in excess of the commercial interests of any business. (Nobody is in the 'radio, online search and grocery shopping' industry… at least not yet. Amazon, I'm looking at you.) So what do we do?

The engineering response is to try and build the data set. This is a noble pursuit, but it can lead to an engineering culture response, which is to try and change human behaviour so that people only do things that can be captured within a single data set. An engineering culture will try to persuade advertisers to shift their spend from radio to digital audio, and their shopping from in-store to online, because then you can track all the behaviours, end to end. So measurement becomes trivial - it's just that, to achieve it, you've had to completely change human behaviour and marketing practice, and build a server farm the size of the moon to capture it all.

The data science response is to look at it probabilistically - to create, for example, agent-based simulations of the whole population based on the very good information we have about the distribution of occurrence of radio listening, online search and supermarket shopping. To do this, you need to master the craft of fitting statistical models without overfitting them - building a model of exposure and response that is elegant: one that matches reality but is also capable of predicting future change and dealing with uncertainty. When you do this, it's possible to build very sophisticated models that give a good guide to how the whole marketing mix influences present and future behaviour, without trying to coerce everything into a single data set.
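As a rough illustration of the simulation idea, here is a toy agent-based sketch. It is not one of the models described in this piece: every parameter (the share of radio listeners, the chance of hearing each spot, the baseline search rate, the lift per exposure and the saturation point) is an invented assumption standing in for figures you would estimate from separate listening, search and sales data sources.

```python
import random


def simulate_population(n_agents=50_000, seed=1,
                        p_listener=0.4,          # assumed share who listen to the station
                        spot_slots=10,           # assumed number of ad spots in the campaign
                        p_hear_spot=0.3,         # assumed chance a listener hears each spot
                        base_search=0.02,        # assumed search rate with no exposure
                        lift_per_exposure=0.01,  # assumed extra search rate per ad heard
                        saturation=4):           # assumed point where extra ads stop helping
    """Simulate agents and count brand searchers and previously exposed searchers."""
    rng = random.Random(seed)
    searchers = 0
    exposed_searchers = 0
    for _ in range(n_agents):
        # Exposure: non-listeners hear nothing; listeners hear each spot independently.
        exposures = 0
        if rng.random() < p_listener:
            exposures = sum(1 for _ in range(spot_slots) if rng.random() < p_hear_spot)
        # Response: search probability rises with exposure, capped at saturation.
        p_search = base_search + lift_per_exposure * min(exposures, saturation)
        if rng.random() < p_search:
            searchers += 1
            if exposures > 0:
                exposed_searchers += 1
    return searchers, exposed_searchers


searchers, exposed_searchers = simulate_population()
share_exposed = exposed_searchers / searchers
print(f"{share_exposed:.0%} of simulated searchers had heard the ad")
```

Under these assumptions roughly 39% of all agents hear at least one ad, so a higher share among searchers reflects the assumed response curve. In real work the craft lies in fitting that curve to observed aggregate data without overfitting it, rather than typing in parameters by hand.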

Data science cultures are vastly better suited to transforming the future of marketing than engineering cultures. They see ambiguity as a challenge rather than an error, and they look hard for the underlying patterns in population-level data. They build models that focus on deriving greater total value from the marketing mix, through simulation and structural analysis across data sets, rather than just deterministic matching of individual-level identifiers. With apologies to Michelle Obama: when they go deep, we go wide.

Data science cultures may not be where you think

Marketing needs to change, and data is going to be fundamental to that change, as everybody has been saying for years. The discipline needs to be treated as a science, and the agencies, consultancies and platforms that want to survive in the next decade need to make a meaningful investment in technology, automation and product.

But while everybody is looking to the engineering cultures of Silicon Valley for salvation, I think the real progress is going to be made by data science cultures - the organisations that combine expertise in statistical data science, data fusion, research and software development, to create meaning and value in the absence of data integrity. Google, to its credit, is one of these. Some of the best original statistical research on the fundamental maths of communications planning is being done in the research group led by Jim Koehler and colleagues.

My employer, GroupM (the media arm of WPP), is another. Over the last few years we've quietly built up the largest single repository of consumer, brand and market data anywhere, of which the big single-source data sets are just one part. We are in the early years of throwing serious data science thinking at that data, building models and simulations for market dynamics that no single data set could hope to capture. Some of the other big media holding companies have strong data science cultures and impressive talent. There are a handful of funded startups, too - but vanishingly few, relative to the tidal wave of investment behind data engineering firms and single-source data platforms.

This is a deeply unfashionable thing to suggest, but a lot of the most advanced marketing science work is being done in media companies and marketing research firms, not in technology companies. There are two reasons for this. First, the media business model has supported a level of original R&D work for most of the last decade, even if it's not always been turned systematically into product. Second, media companies and agencies are ultimately accountable for what to invest, where, how much, and when - the kind of large-scale questions that can't be solved simply by optimising each individual channel to the hilt. (On a personal note, this is why I moved from digital analytics into media four years ago - the data is more diverse, the problems harder and more interesting.)

While everybody is focusing on the data engineering smarts of the Big Four platforms, keep an eye on the data science cultures that are transforming a huge breadth of data into new, sophisticated ways of predicting marketing outcomes. And if you're a data scientist interested in marketing, look for the data science cultures, not just the engineering ones. They're harder to find because money and fame aren't yet flowing their way… but they have a big chance of transforming a trillion-dollar industry over the next few years.

# Alex Steer (04/01/2019)
