
Right then. It's been almost a year since I last posted here - a year in the life of my agency, Maxus, that I look forward to talking about in more detail in future when the paint has dried. (Short version: IPA Effectiveness awards; I became CSO; restructure and retraining; building new cross-media planning tech; best agency in Top 100 Companies to Work for list; big new business win; merger with MEC to become Wavemaker as of Jan 2018.)

For now, a few notes on an idea that sits behind an increasing amount of what we do, and talk about, as an industry: optimisation.

First, a quick definition. Optimisation is the use of analytical methods to select the best from a series of specified options, to deliver a specified outcome. These days, a lot of optimisation is the automated application of analytical learning. I wrote a long piece last year on some of the basic machine learning applications: anomaly detection, conditional probability and inference. Optimisation can take any of these types of analysis as inputs, and an optimiser is any algorithm that makes choices based on the probability of success from the analysis it has done. Optimisation crops up in all sorts of marketing applications that we tend to discuss as if they were separate things:

- Programmatic buying

- Onsite personalisation

- Email marketing automation

- AB and multivariate testing

- Digital attribution

- Marketing mix modelling

- Propensity modelling

- Predictive analytics

- Dynamic creative

- Chatbots

...and so on, until we've got enough buzzwords to fill a conference. All of these are versions of optimisation, differently packaged.
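To make the definition above concrete, here is a minimal sketch of an optimiser in Python: it estimates a probability of success for each of a set of specified options and picks the highest. The `Option` class, the headline names, the counts and the add-one smoothing are all illustrative assumptions of mine, not a description of how any particular product works.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    successes: int  # e.g. clicks or conversions observed so far (made-up numbers)
    trials: int     # e.g. impressions or visits observed so far (made-up numbers)


def estimated_success_rate(option: Option) -> float:
    """Smoothed success probability; add-one smoothing is an illustrative choice."""
    return (option.successes + 1) / (option.trials + 2)


def choose_best(options: list[Option]) -> Option:
    """Select the option with the highest estimated probability of success."""
    return max(options, key=estimated_success_rate)


# Hypothetical options: three ad headlines with observed results.
options = [
    Option("headline_a", successes=30, trials=1000),
    Option("headline_b", successes=45, trials=1000),
    Option("headline_c", successes=8, trials=400),
]

best = choose_best(options)
print(best.name, round(estimated_success_rate(best), 4))
```

Everything in the list above does some version of this, with a fancier way of estimating the probabilities and a different set of options to choose between.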

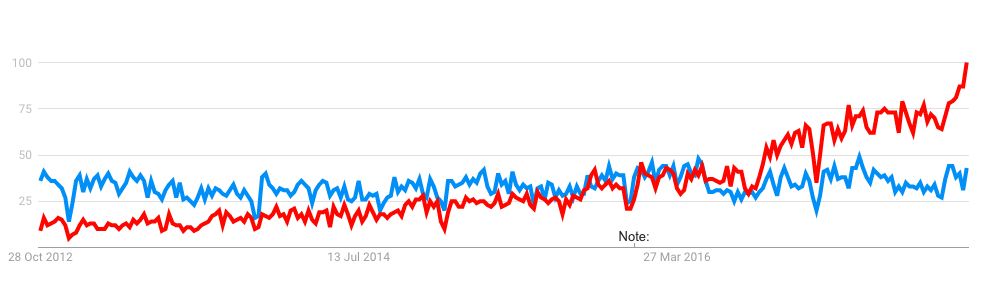

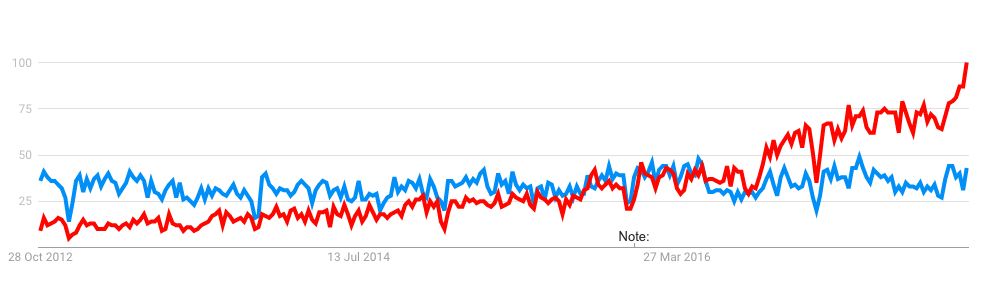

When I say optimisation 'sits behind' a lot of what we do in marketing and media today, it's because optimisation is almost the opposite of an industry buzzword: a term that has remained surprisingly constant in marketing discourse over the last few years, while its application has broadened considerably. By way of lightly-researched reference, here are Google search volume trends for 'optimisation' and 'machine learning' in the UK over the last five years (it makes little difference, by the way, if you search for the US or UK spelling):

Search volumes for optimisation (blue) have remained fairly constant over the last half-decade (and are driven mainly by 'search engine optimisation'), whereas 'machine learning' (red) has risen, and crossed over in early 2016. I show this as just one cherry-picked example of a tendency for marketing language to imply that there is more innovation in the market than actually exists. We can see this more clearly by looking at the phenomenon of hype clustering around machine learning.

Hype clustering

Let's look back at the Gartner Hype Cycle, the canonical field guide to febrile technology jargon, from July 2011:

We can see a good distribution of technologies that rely on optimisation, all the way across the cycle: from video analytics and natural-language question answering at the wild end, to predictive analytics and speech recognition approaching maturity.

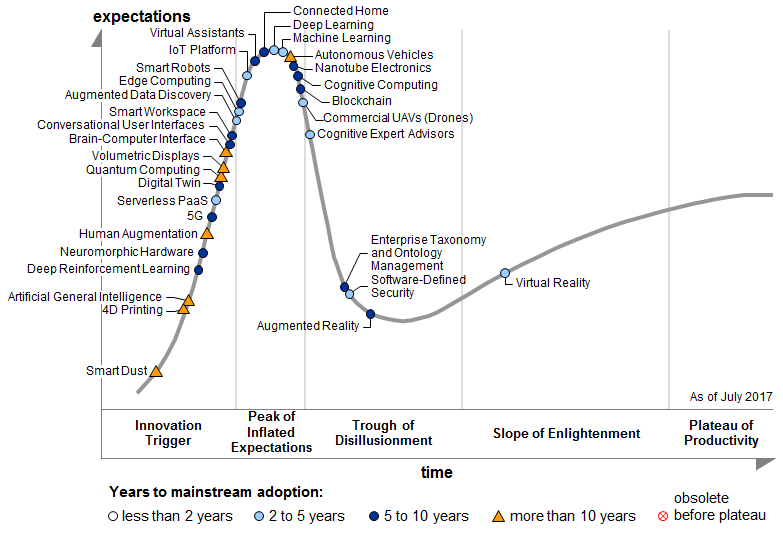

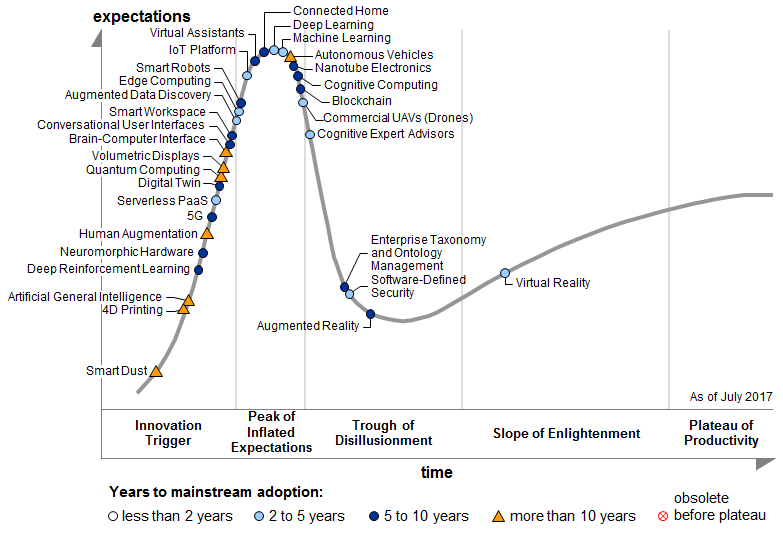

Fast forward six years to the most recent hype cycle from July 2017:

'Machine learning' and 'deep learning' have found their way to the top of the hype curve... while everything else on the list has disappeared (except the very-far-off category of 'artificial general intelligence'). Fascinatingly, machine learning is predicted to reach maturity within 2-5 years, whereas some of the technologies on the list six years ago were predicted to have matured by now. In other words, several of the technologies that were supposedly past the point of peak hype in 2011 are now back there, but rechristened under the umbrella of machine learning.

Machine learning is a classic piece of hype clustering: it combines a lot of analytics and technical methods that are themselves no longer hypeworthy, with a few that are still extremely niche. The result is something that sounds new enough to be exciting, and wide-ranging enough to be sellable to big businesses in large quantities - very much the situation that big data was in when it crested the hype cycle in 2013.

Sitting behind a lot of 'machine learning' is good old-fashioned optimisation, albeit increasingly powered by faster computing and the ability to run over larger volumes of data than a few years ago. Across digital media, paid search, paid social, CRM, digital content management and ecommerce, businesses are beginning to rely hugely on optimisation algorithms of one sort or another, often without a clear sense of how that optimisation is working.

This is, it won't surprise you to learn, hugely problematic.

Doing the right thing

Optimisation is the application of analysis to answer the question: how do I do the right thing? Automated mathematical optimisation is a very elegant solution, especially given the processing firepower we can throw at it these days. But it comes with a great big caveat.

You have to know what the right thing is.

In the disciplines where automated optimisation first sprang up, this was relatively straightforward. In paid search advertising, for example, you want to match ad copy to keywords in a way that gets as many people who have searched for 'discount legal services' or 'terrifyingly lifelike clown statues' to click on your ads as possible. In ecommerce optimisation, you want to test versions of your checkout page flow in order to maximise the proportion of people who make it right through to payment. In a political email campaign, you want as many of the people on your mailing list to open the message, click the link and donate to your candidate as possible. In all of these, there's a clear right answer, because you have:

- a fixed group of people

- a fixed objective

- an unambiguous set of success measures

Those are the kinds of problems that optimisation can help you solve more quickly and efficiently than by trial and error, or manual number-crunching.
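The checkout example shows what a well-posed problem looks like in practice. Below is a rough sketch, assuming two hypothetical checkout flows shown to visitors drawn from the same group, with 'reached payment' as the single success measure. The visitor counts are invented, and the pooled two-proportion z-test is just one standard way of checking that a difference is more than noise.

```python
import math


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z-statistic for the difference in conversion rate between variants B and A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / standard_error


# Invented figures: same audience, same objective, one unambiguous measure.
visitors_a, payments_a = 10_000, 400   # current checkout flow
visitors_b, payments_b = 10_000, 460   # alternative checkout flow

z = two_proportion_z(payments_a, visitors_a, payments_b, visitors_b)
# 1.96 is the usual two-sided 5% threshold for a z-test.
winner = "B" if z > 1.96 else "A" if z < -1.96 else "no clear winner"
print(f"z = {z:.2f}, winner: {winner}")
```

Note what stays constant: the people, the objective and the measure. The only thing that varies is the option being tested.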

The difficulty arises when we extend the logic of optimisation without extending the constraints. In other words, when an industry that is in love with the rhetoric of analytics and machine learning tries to extend that rhetoric to places where it doesn't fit so neatly.

False optimisation

Over the last few years we've seen a rush of brand marketing budgets into digital media. This is sensible in one respect as it reflects shifting media consumption habits and the need for brands, at a basic level, to put themselves where their audiences are looking. On the other hand, it's exposed some of the bad habits of a digital media ecosystem previously funded largely by performance marketing budgets, and some of the bigger advertisers have acknowledged their initial naivety in managing digital media effectively. Cue a situation where lots of brand marketers are concerned about the variability of the quality of their advertising delivery, especially the impact of digital's 'unholy trinity' of brand safety, viewability and fraud.

And what do 'worry about variability' plus 'digital marketing' equal? That's right: optimisation.

Flash forward and we find ourselves in a marketplace where the logic of optimisation is being sold heavily to brand marketers. I've lost count of the number of solutions that claim to be able to optimise the targeting of brand marketing campaigns in real time. The lingo varies for each sales pitch, but there are two persistent themes that come out:

- Optimising your brand campaign targeting based on quality.

- Optimising your brand campaign targeting based on brand impact.

Both of these, at first look, sound unproblematic, beneficial, and a smart thing to do as a responsible marketer who wants to have a good relationship with senior management and finance. Who could argue with the idea of higher-quality, more impactful brand campaigns?

The first of them is valid. It is possible to score media impressions based on their likely viewability, contextual brand safety, and delivery to real human beings in your target audience. While the ability to do this in practice varies, there is nothing wrong with this as an aim. It can be a distraction if it becomes the objective on which media delivery is measured, rather than a hygiene factor; but this is just a case of not letting the tail wag the dog.

The second looks the same, but it isn't, and it can be fatal to the effectiveness of brand advertising. Here's why.

Brand advertising, if properly planned, isn't designed towards a short-term conversion objective (e.g. a sale). Rather, it is the advertising you do to build brand equity, which then pays off when people are in the market for your category, by improving their propensity to choose you, or reducing your cost of generating short-term sales. In other words, brand advertising softens us up.

Why does this matter? Because optimisation was designed to operate at the sharp end of the purchase funnel (so to speak) - to find the option among a set that is most likely to lead to a positive outcome. When you apply this logic to brand advertising, these are the steps that an optimiser goes through:

- Measure the change in brand perception that results from exposure to advertising (e.g. through research)

- Find the types of people that exhibit the greatest improvement in brand perception

- Prioritise showing the advertising to those types of people

Now, remember what we said earlier about the three golden rules of optimisation:

- a fixed group of people

- a fixed objective

- an unambiguous set of success measures

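Optimising the targeting of your brand advertising to improve its success metrics violates the first rule: the group of people is no longer fixed, because the optimiser itself changes who sees the advertising.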

This is what we call preaching to the nearly-converted: serving brand advertising to people who can easily be nudged into having a higher opinion of your brand.

It is false optimisation because it confuses objectives with metrics. The objective of brand advertising is to change people's minds, or confirm their suspicions, about brands. A measure for this is the aggregate change in strength of perception among the buying audience. Diagnostically, research can be used to understand if the advertising has any weak spots (e.g. it creates little change among older women or younger men). But a diagnosis is not necessarily grounds for optimisation. If you only serve your ads to people whose minds are most easily changed, you will drive splendid short-term results but you will ultimately run out of willing buyers, by having deliberately neglected to keep advertising to your tougher prospects. It's the media equivalent of being a head of state and only listening to the advice of people who tell you you're doing brilliantly - the short-term kick is tremendous, but the potential for unpleasant surprise is significant.
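A toy calculation shows how the metric and the objective can pull apart. This is a sketch under loud assumptions: the three segments, their sizes and their uplift figures are invented, and I assume that only a person's first exposure shifts their perception, so extra frequency against the same people is wasted. Real response curves are messier, but the direction of the result is the point.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    people: int
    uplift: float  # hypothetical perception points gained by one exposed person


def run_plan(segments: list[Segment], impressions: dict[str, int]) -> tuple[float, float]:
    """Return (uplift among people exposed, uplift across the whole buying audience)."""
    audience = sum(s.people for s in segments)
    exposed = 0
    total_points = 0.0
    for s in segments:
        # Assumption: only the first exposure counts, so impressions beyond
        # the size of the segment add frequency but no further uplift.
        reached = min(s.people, impressions.get(s.name, 0))
        exposed += reached
        total_points += reached * s.uplift
    if exposed == 0:
        return 0.0, 0.0
    return total_points / exposed, total_points / audience


# Invented buying audience of one million people.
segments = [
    Segment("nearly_converted", 200_000, 8.0),
    Segment("open_minded", 300_000, 4.0),
    Segment("tough_prospects", 500_000, 1.5),
]
budget = 1_000_000  # impressions

broad_plan = {s.name: s.people for s in segments}   # one impression per person
narrow_plan = {"nearly_converted": budget}          # everything on the easy segment

broad_metric, broad_objective = run_plan(segments, broad_plan)
narrow_metric, narrow_objective = run_plan(segments, narrow_plan)

print(f"broad:  metric {broad_metric:.2f}, objective {broad_objective:.2f}")
print(f"narrow: metric {narrow_metric:.2f}, objective {narrow_objective:.2f}")
```

Under these assumptions the narrowed plan reports the better number among the people it reached, while the change across the whole buying audience, which is the actual objective, goes down.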

Preaching to the valuable

The heretical-sounding conclusion is: you should not optimise the targeting of your brand campaigns.

Take a deep breath, have a sit down. But I mean it. You can optimise the delivery, by which I mean:

- Place ads in contexts that beneficially affect brand perceptions

- Show your ads only to people in your target buying audience (not to people who can't buy you, or to bots)

- Show better-quality impressions (more viewable, in brand-safe contexts)

- Show creative that gets a bigger response from your target audience

But do not narrow your targeting based on the subsets of your audience whose perceptions of you respond best. That is a fast track to eliminating the ability of your brand to recruit new buyers over time and will create a cycle of false optimisation where you not only preach to the converted, but you only say the things they most like to hear.

Brand advertising is the art of preaching to the valuable. It means finding out which people you need to buy your brand in order to make enough money, and refining your messaging to improve the likelihood that they will. Knowing that requires a serious investment in knowledge and analysis before you start, to find your most viable sources of growth and the best places and ways to advertise based on historic information. This is anathema to people who sell ad-tech for a living, for whom 'test and learn' is of almost theological importance, not least because it encourages more time using and tweaking the technology. The 'advertise first, ask questions later' approach looks like rigour in the heat of the moment (real-time data! ongoing optimisation!) but is the exact opposite.

Testing and learning is exactly the right approach when you have multiple options to get the same outcome from the same group of people. It is precisely the wrong thing to do if it leads to you changing which people to speak to. It's like asking out the girl/boy of your dreams, getting turned down, then asking out someone else using the same line, and thinking you've succeeded. Change the context, change the line, but don't change the audience.
