
The Christmas/New Year period inevitably means three things. One, people blog less. Two, the sales start. Three, people start predicting trends.

So I'm going to kick-start this blog again after its holiday slump with a longish post talking about the end-of-year sales and the new-year trends; and in particular about a trend that seems to have a slightly lower profile than I think it deserves.

Believe it or not, the trend is that TV is becoming more important. And it's only really worth talking about because so many of us have got sidetracked by computers and mobile over the last few years.

Ever since Black Friday (in the US), this year's winter sales story has been about the growth in online retail. Online spending rose 15% to $36.4bn over the holiday season in the US, well above the overall projected spending rise (3.3%), and the top online retailers saw 42% more traffic than last year. The growth areas for online sales were computer hardware (23%), books and magazines (22%), consumer electronics (21%) and software (20%).

TV feels like the forgotten screen in the consumer electronics category. Read through JWT's excellent 100 Things to Watch for 2011 and you'll notice how much of it is about computing, and particularly mobile computing. There is one mention of TV, in a discussion on video calling. With TV barely featuring in such a comprehensive collection of forecasts, you won't be surprised to know that it's also absent in lots of smaller, more off-the-cuff predictions that do the rounds at this time of year.

Which is odd, as this is set to be a very good year for the big glowing box in the living room. It looks like 2010 saw 17% year-on-year growth in TV sales worldwide. Most of the growth is being driven by emerging markets, especially China, and by new technology going mainstream fast in mature markets. Shipments of LCD TVs grew 31% in 2010, but that adoption curve is already flattening, with 13% growth forecast for 2011.

The new thing for the new year will be internet TV, projected to account for a fifth of new TV shipments. Since this has the word 'internet' in it, there's predictably been lots of chatter about it, what with Google TV, Apple TV and what looks like a contender from Microsoft about to be shown off at CES in a couple of days' time.

Globally, though, the growth will be fuelled by the newly affordable, not just the new. There's a huge emerging market for TV, behind the US/UK technology curve but with a lot of collective spending power. In South Africa, for example, TV ownership by household rose from 53.8% to 65.6% between 2001 and 2007, and is catching up with radio ownership (76.6% in 2007). Of the 3.2 million South African subscribers to the DSTV pay-TV service, over 10% were added in the six months to September 2010, when DSTV introduced its lower-cost Compact package to attract new customers. There are lots of countries where TV has a lot more growth potential than in SA (I just don't have that data - sorry).

So TV is about to become very big in lots of new markets. In well established markets, TV is already big, but it's quietly becoming more important.

A couple of years ago, TV got a brief flurry of attention as part of that whole raft of stories about recession-proof consumer behaviour. Apparently, in late 2008 and early 2009, we were all spending more time at home to save money, so we were buying big new TVs in anticipation of spending less time going out and more time on the sofa.

That TV story came and went along with allotments, lipstick sales, hemlines, and other barometers of recessionistadom. That's a pity, as I think it masked something bigger. We're starting to pay a lot more attention to TV, and to demand more of it.

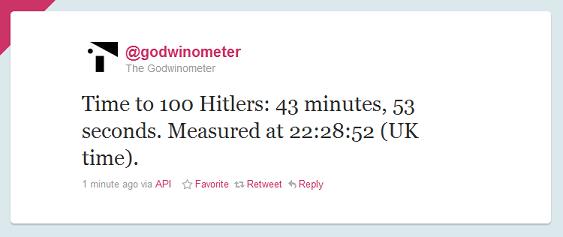

3D TV remains a gimmick, and sales have been disappointing. This is usually blamed (as in the link) on the lack of worthwhile 3D content, but my hunch is that 3D TV basically misjudges how we watch TV. Unless we're fanatical, most of the time we give TV continuous partial attention. It's one of the reasons we don't mind ad breaks on TV but hate them on streaming video, and why we'd walk out of the cinema if they interrupted the screening to show commercials. 3D TV assumes we give TV our full attention, so are happy to wear big plastic glasses while watching. We don't, so we aren't. We get up, walk round, chat to each other, make tea, play on our phones, and so on.

But 3D TV gets it slightly right even as it gets it wrong. It assumes we now want to give TV sets our full attention. We don't, but we are giving them more than we used to. We no longer want them to be merely good enough, on unobtrusively in the background like radios with pictures.

I think TVs are turning into appointment-to-view technology, serving those occasions when we want to sit down and be entertained by one thing for an extended period. Hence the success of HD; of hard-disk recorders; of games consoles and add-ons like the Wii, the Xbox 360 and Kinect, whose natural home is the living room, not the bedroom. 3D glasses are just a step too far in the right direction.

This shift has lasted too long to be a recessionary fluke. Perhaps it's because we have more devices, and more portable ones (smartphones, tablets, laptops), that fulfil our continuous-partial-attention entertainment needs. We now expect TV to fill the niche that computers and mobile devices don't: something to entertain us, quite immersively, for specific periods of time, when we choose.

This obviously has implications for advertising, beyond the much-discussed impacts of recording and the new potential of internet TV to target ads. If we demand more of TV, we may be less forgiving of interruptive, uninteresting advertising. (Or maybe that's just wishful thinking.)

With the exception of 3D, which I reckon will soon be relegated to gaming and cinema (fully immersive screen entertainment), I'd bet that this will be a good year for the humble telly, and everything that plugs into it.